Are you curious about how different parts of the world are handling AI? With technology advancing faster than ever, many are asking how governments are keeping up.

AI regulations are being developed in Asia, the US, and Europe, but each region takes a different approach. Each has its own way of thinking about safety, privacy, and fairness when it comes to artificial intelligence.

But what exactly are these regulations, and why do they matter?

What is AI Regulation?

AI regulation refers to the rules governments make to control how artificial intelligence (AI) is created and used. These rules aim to ensure that AI is safe and fair and doesn't harm people. They focus on privacy, security, and ethical use. Different countries set different rules based on their priorities.

Current AI Regulations

Policymakers in Europe, the U.S., and Southeast Asia are working on rules for AI that balance safety with innovation, but they're taking different approaches.

EU AI Regulations

- Europe is ahead in writing laws to control AI. In May 2024, the EU Council approved the EU AI Act, after the European Parliament agreed to it in March.

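- This is the world's first comprehensive law regulating AI, including general-purpose AI models. It applies to companies offering AI systems in any of the 27 EU countries, even if they're based elsewhere. The law entered into force in August 2024, and most of its rules apply from August 2026. Some parts take effect earlier: bans on certain practices, such as scraping facial images from the internet or CCTV footage to build facial recognition databases, apply from February 2025.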

U.S. AI Regulations

- In the U.S., AI regulation is moving more slowly. Congress hasn't passed any major AI laws yet, but in October 2023, President Biden signed an Executive Order directing federal agencies on the safe use of AI. It aims to encourage innovation while guarding against bias and security risks.

- The order lists over 100 tasks for agencies, including making it easier for people working with AI to get visas and creating research institutes.

AI Regulation in ASEAN

- In Southeast Asia, AI regulation is also making progress. The Association of Southeast Asian Nations (ASEAN), whose 10 member states include Singapore, Malaysia, and Thailand, introduced the ASEAN Guide on AI Governance and Ethics in February 2024.

- This guide helps organizations and governments design, develop, and use AI responsibly. It takes a flexible, voluntary approach to regulating AI.

- However, the ASEAN guide mainly covers traditional AI, not generative AI, and is similar to Singapore’s AI governance framework. It offers recommendations both for individual countries and the region as a whole. It encourages companies to respect cultural differences but doesn’t set strict rules like the EU AI Act.

- Most countries in Asia, except China, have taken a lighter approach to AI regulation, aiming to support the growth of the AI industry. Still, some, like Vietnam and South Korea, are starting to consider stricter regulations. More countries may follow once they see how the EU’s AI rules work out.

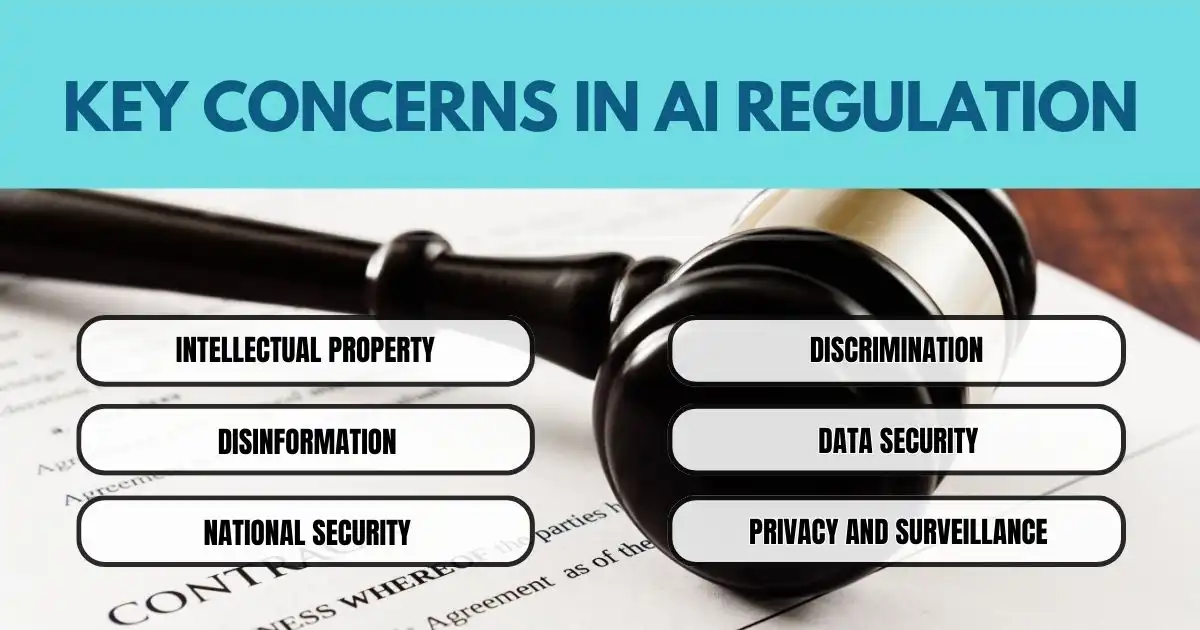

Key Concerns in AI Regulation

The accelerating development of artificial intelligence (AI) has sparked discussions about when and how it should be regulated. Experts believe AI will affect many industries, but there are concerns about its potential risks. Some of the main issues include:

- Intellectual Property – AI systems may use copyrighted materials for training, leading to lawsuits and questions about who owns AI-generated content.

- Disinformation – AI can create fake media, like deepfakes, that spread false information and fuel problems such as election interference and financial scams.

- National Security – AI could be used by bad actors, such as terrorists, to threaten national security or even develop dangerous weapons.

- Discrimination – AI systems can be biased by the data they are trained on, leading to unfair outcomes for certain groups of people.

- Data Security – AI relies on large datasets, often containing sensitive information like health or financial records, which can be vulnerable to cyberattacks.

- Privacy and Surveillance – AI-powered tools, like facial recognition and location tracking, raise concerns about people’s privacy and civil liberties.

Why AI Regulation is Challenging

Many countries already have laws protecting data privacy, but regulating AI is harder. Here's why:

- Global Reach – AI is used worldwide, which leads to challenges when different countries have different laws.

- No Universal Standards – There aren’t global standards for testing and certifying AI systems.

- Fast Evolution – AI technology is advancing quickly, often faster than governments can update regulations.

- Complexity – AI systems are diverse and complicated, making it hard to create one-size-fits-all rules.

Pros and Cons of Regulating AI

Regulating AI has both upsides and downsides, which shows how complex the issue is.

Pros

- More Transparency – Regulations can make AI more transparent by requiring companies to explain how their systems work, for example by disclosing whether copyrighted material was used in training.

- Better Data Protection – AI regulations can help protect people’s data, giving users more control and increasing security.

- Fighting Bias – Rules can help reduce bias in AI, leading to fairer and more equal results.

Cons

- Slows Innovation – Too many regulations could slow research and development, making it harder to create new AI technologies.

- Higher Costs – Businesses, especially start-ups, may face high costs to meet new regulations.

- Outdated Rules – As AI evolves, laws can quickly fall behind and need frequent updates, making it hard for regulation to keep pace with new developments.

What Will We Do Next?

AI is changing fast, and how we choose to govern it now will shape the world we live in tomorrow. The rules being made in Asia, the US, and Europe aren't just about technology; they're about making sure AI is fair and safe and respects our privacy. But balance matters: if we wait too long, harms can spread unchecked, and if we write rules that slow progress too much, we might miss out on AI's benefits.

So, what will we do? Will we find a way to balance safety and innovation, or let fear hold us back?

The decisions we make today about AI are important for everyone. Now is the time to think about what kind of future we want and take steps to make sure AI works for all of us. Let’s make choices that help AI improve our lives while keeping us safe.

FAQs

Why do critics see rigid, rules-based approaches to AI regulation as a poor fit?

Fixed, prescriptive rules struggle to keep up with complex, fast-changing, real-world AI applications. The EU AI Act instead takes a risk-based approach, scaling obligations to how much risk an AI system poses, from banned practices down to minimal-risk uses.

How do supporters defend the AI Act against recent criticisms?

Supporters argue that the AI Act balances innovation and regulation. In their view, it promotes ethical AI development while preserving Europe's competitiveness in global markets.

What are the key concerns of executives regarding the European AI Act?

Executives worry that the EU AI Act may raise compliance costs. Others, however, see it as building trust, which could benefit businesses in the long term.

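How does the EU AI Act differ from AI rules in Asia?

The EU AI Act is a binding law with strict, risk-based requirements for companies operating in the EU. By contrast, the ASEAN Guide on AI Governance and Ethics takes a flexible, voluntary approach, and most Asian countries other than China have so far favored lighter regulation to support the growth of their AI industries.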