“Technology is best when it brings people together,” said Matt Mullenweg, the co-founder of WordPress.

But what happens when technology creates more questions than answers?

Generative AI is one of the most exciting tools today, changing how we create art, write stories, and solve problems. However, with great power comes great responsibility—and challenges.

From ethical dilemmas to technical limitations, generative AI isn’t as simple as it seems. These challenges affect not only tech companies but also schools, businesses, and society as a whole. Understanding these issues helps us use AI wisely and prepare for its impact on the future.

What is Generative AI?

Generative AI is a type of artificial intelligence that creates new content such as text, images, music, or code. It learns patterns from large amounts of example data and uses them to produce new material with similar characteristics. Traditional AI systems mostly classify information or predict an answer. Generative AI instead produces output that did not exist before. For example, GPT models generate text, DALL-E generates images from text descriptions, and AI music tools compose songs.

Generative AI is used in areas like art, writing, and research. It helps by saving time, giving new ideas, and making creativity easier. It is changing how people create and work.

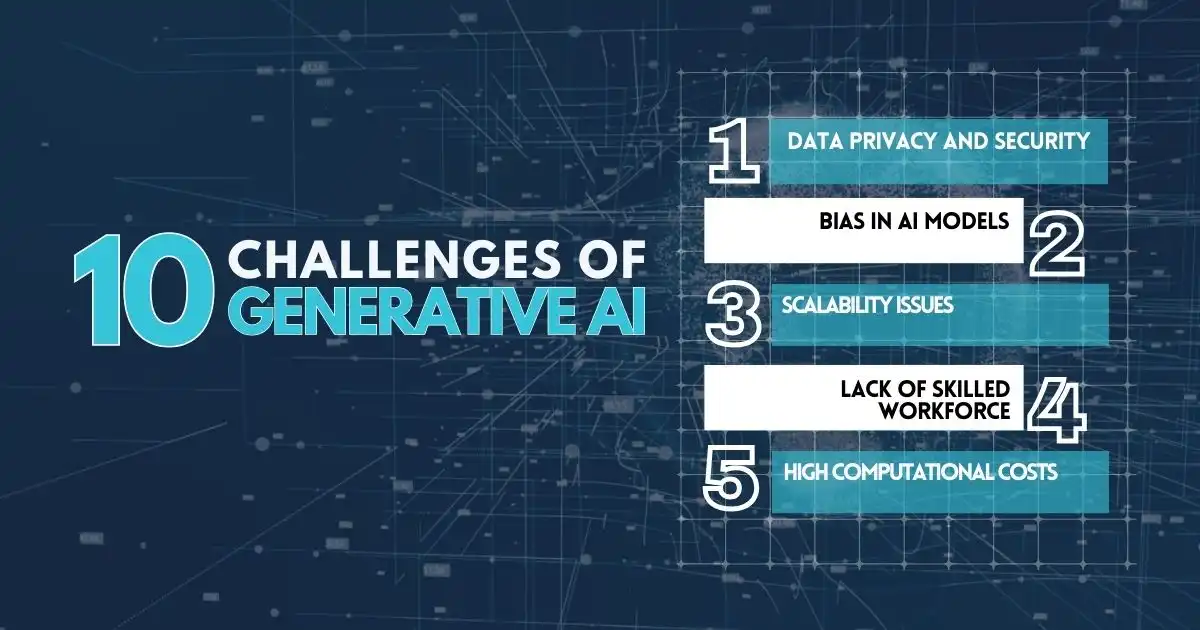

10 Challenges of Generative AI

Generative AI is becoming a powerful tool in many industries, but it comes with challenges that need attention. To use it effectively, we must address these issues and work towards solutions.

Here are the ten challenges and ways to manage them:

1. Data Privacy and Security

Generative AI relies on vast datasets, which creates risks around data privacy and security. Sensitive personal or corporate data might be exposed or misused during the training process, and models can sometimes reproduce fragments of their training data in their outputs.

Implement strict data anonymization techniques, encrypt sensitive data, and comply with privacy regulations such as GDPR. Regular audits can ensure that data privacy is maintained throughout the AI lifecycle.
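Here is a minimal sketch of anonymizing text before it enters a training set. This Python example masks email addresses and phone-number-like strings, and it replaces user IDs with a keyed hash (HMAC-SHA256). The record layout (`user_id`, `text`), the regex patterns, and the key handling are illustrative assumptions, not a complete PII pipeline. Keyed hashing is pseudonymization rather than full anonymization, and under GDPR pseudonymized data still counts as personal data.

```python
import hashlib
import hmac
import re

# Illustrative patterns only; real PII detection needs far broader coverage
# (names, addresses, ID numbers, context in free text).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same ID always maps to the same token, so records can still be
    linked, but the original ID cannot be recovered without the key.
    """
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "user_" + digest[:16]


def redact_text(text: str) -> str:
    """Mask email addresses first, then phone-number-like strings."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of a (hypothetical) training record without direct identifiers."""
    return {
        "user": pseudonymize_id(record["user_id"], secret_key),
        "text": redact_text(record["text"]),
    }


if __name__ == "__main__":
    key = b"load-this-from-a-secrets-manager"  # hypothetical; never hard-code real keys
    raw = {"user_id": "u-1029", "text": "Email me at jane.doe@example.com or call +1 555 123 4567."}
    print(prepare_record(raw, key))
```

Running it prints a record whose text reads `Email me at [EMAIL] or call [PHONE].` and whose user field is a `user_` token instead of the original ID.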

2. Bias in AI Models

AI systems can inherit biases from the data they are trained on. If that data over- or under-represents certain groups, or reflects historical prejudices, the model can reproduce those patterns in its outputs, leading to unfair or discriminatory results.

Use diverse and representative datasets to train AI models. Regularly audit AI systems for bias, and involve ethics experts to ensure fairness and accountability.
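One way to make a bias audit concrete is to compare how often a model produces a given outcome for different groups. The sketch below assumes you already have audit results as `(group, outcome)` pairs, for example from running the same prompts with only a group attribute changed. It computes per-group rates, the gap between the highest and lowest rate (demographic parity difference), and their ratio. It flags results whose ratio falls below 0.8, a threshold borrowed from the "four-fifths rule" heuristic used in US employment contexts. The function names and threshold are illustrative assumptions. A low ratio signals that a case needs review and does not by itself prove discrimination.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is truthy
    when the model produced the outcome being audited.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(bool(outcome))
    return {g: positives[g] / totals[g] for g in totals}


def audit(records, min_ratio=0.8):
    """Compare the lowest and highest group rates and flag large disparities."""
    rates = selection_rates(records)
    lowest, highest = min(rates.values()), max(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_gap": highest - lowest,
        "impact_ratio": ratio,
        "flagged": ratio < min_ratio,
    }


if __name__ == "__main__":
    # Hypothetical audit data: group A gets the outcome 8/10 times, group B 5/10 times.
    data = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
    print(audit(data))
```

For the hypothetical data above, the rates are 0.8 and 0.5 and the ratio is 0.625, so the result is flagged for closer review.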

3. Scalability Issues

Scaling generative AI to handle large data volumes or complex tasks can be difficult and expensive, especially for smaller businesses.

Use cloud-based AI platforms that offer scalable resources. Implement modular AI systems that can be expanded as the organization’s needs grow, making scaling more affordable and manageable.

4. Lack of Skilled Workforce

There is a shortage of professionals with expertise in AI and machine learning, which slows down the adoption and effective use of generative AI.

Invest in training programs to upskill employees. Partner with universities or AI training centers to develop specialized programs. Hiring AI experts or collaborating with AI firms can help fill knowledge gaps.

5. High Computational Costs

Training and running generative AI models require significant computational power, resulting in high costs, especially for smaller organizations with limited budgets.

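Optimize AI models to be more resource-efficient, for example through quantization (storing weights at lower numeric precision), pruning, or distilling them into smaller models. Leverage cloud computing services and AI-as-a-Service (AIaaS) solutions to reduce infrastructure costs. Explore energy-efficient hardware and algorithms to further minimize expenses.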
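A quick back-of-the-envelope calculation shows why model optimization matters for cost. The memory needed just to store a model's weights is roughly the number of parameters times the bytes used per parameter. Lowering the numeric precision (quantization) therefore shrinks that memory proportionally. The sketch below assumes a hypothetical 7-billion-parameter model. It ignores activations, caches, and framework overhead, which add to the real footprint.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):.1f} GB")
```

For this hypothetical model, the weights take 28.0 GB at fp32, 14.0 GB at fp16, 7.0 GB at int8, and 3.5 GB at 4-bit precision. That difference can determine what hardware the model fits on.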

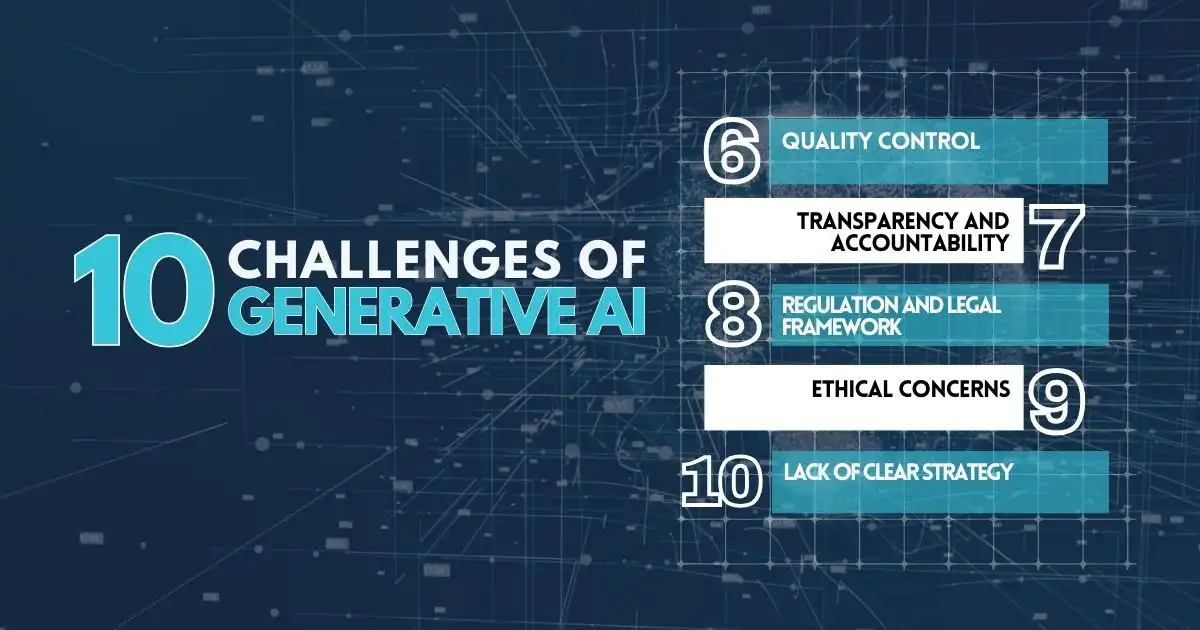

6. Quality Control

Ensuring the accuracy and reliability of AI-generated content is challenging. AI models can produce factual errors, confident-sounding but fabricated information (often called “hallucinations”), or nonsensical outputs, all of which can reduce trust in the technology.

Implement quality control systems, including human review processes for AI outputs. Regularly update and improve AI models with fresh data to enhance accuracy and reduce errors.
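A human review process is often implemented as a simple routing step. Outputs that fail basic automated checks, or that come with a low confidence score, go to a person before they are published. The sketch below assumes each draft carries a confidence score between 0 and 1, and how that score is produced is outside its scope. The checks, the length limit, and the 0.75 threshold are hypothetical placeholders to tune for a real use case.

```python
from dataclasses import dataclass, field

MAX_CHARS = 2000  # hypothetical length limit for this use case


@dataclass
class Draft:
    text: str
    confidence: float  # assumed score in [0, 1]; its source is outside this sketch


@dataclass
class ReviewQueue:
    approved: list = field(default_factory=list)
    needs_review: list = field(default_factory=list)


def automated_checks(draft: Draft) -> list:
    """Return a list of problems found by cheap, rule-based checks."""
    problems = []
    if not draft.text.strip():
        problems.append("empty output")
    if len(draft.text) > MAX_CHARS:
        problems.append("exceeds length limit")
    return problems


def route(draft: Draft, queue: ReviewQueue, threshold: float = 0.75) -> str:
    """Auto-approve only drafts that pass every check and meet the confidence threshold."""
    problems = automated_checks(draft)
    if problems or draft.confidence < threshold:
        queue.needs_review.append((draft, problems))
        return "review"
    queue.approved.append(draft)
    return "approved"


if __name__ == "__main__":
    queue = ReviewQueue()
    print(route(Draft("Paris is the capital of France.", 0.92), queue))  # approved
    print(route(Draft("The treaty was signed in 1723.", 0.41), queue))   # review
```

The design keeps humans in the loop by default: anything the rules cannot vouch for is queued for review rather than discarded.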

7. Transparency and Accountability

Generative AI models often work as “black boxes,” meaning it can be difficult to understand how they arrive at a particular output. This lack of transparency becomes a problem when AI outputs are questioned or criticized.

Increase transparency by making AI systems more explainable. Develop methods to trace and understand the reasoning behind AI-generated content. Clear documentation and accountability measures help organizations explain their AI systems’ outputs and take responsibility for them.

8. Regulation and Legal Framework

As generative AI grows in use, the legal implications become more complex. Questions about intellectual property, accountability, and misuse of AI-generated content need clear legal guidelines.

Governments and industry groups need to develop updated laws and regulations to address the unique challenges of generative AI. Businesses should stay informed about evolving regulations to ensure compliance and protect their interests.

9. Ethical Concerns

Generative AI can be misused to create deepfakes or spread misinformation, posing serious ethical challenges. AI-generated content might deceive people or harm reputations.

Develop clear ethical guidelines and enforce transparency by labeling AI-generated content. Technologies like deepfake detection tools can help flag harmful or misleading content.
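Labeling AI-generated content can be as simple as attaching a machine-readable provenance record. The sketch below wraps generated text with a label containing an `ai_generated` flag, a hypothetical generator name, a timestamp, and a SHA-256 hash of the content. It then signs the label with HMAC so that anyone holding the key can detect edited content or forged labels. This is a simplified illustration and does not implement an industry standard such as C2PA Content Credentials. Because the label travels alongside the content, it can still be stripped off entirely.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def _sign(label: dict, key: bytes) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the label."""
    payload = json.dumps(label, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def label_content(content: str, generator: str, key: bytes) -> dict:
    """Wrap AI-generated text with a signed provenance label."""
    label = {
        "ai_generated": True,
        "generator": generator,  # hypothetical model or tool name
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }
    label["signature"] = _sign(label, key)
    return {"content": content, "label": label}


def verify_label(item: dict, key: bytes) -> bool:
    """Check that the content is unchanged and the label was signed with this key."""
    label = dict(item["label"])
    signature = label.pop("signature", "")
    content_hash = hashlib.sha256(item["content"].encode("utf-8")).hexdigest()
    return content_hash == label.get("sha256") and hmac.compare_digest(signature, _sign(label, key))


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical; keep real keys in a secrets manager
    item = label_content("A generated paragraph.", "example-model", key)
    print(verify_label(item, key))
```

Editing the content, or changing any field of the label, makes verification fail for anyone checking with the original key.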

10. Lack of Clear Strategy

Many organizations face challenges in implementing generative AI due to a lack of clear strategy or roadmap. Without a well-thought-out plan, AI adoption may fail to meet business goals or result in wasted resources.

Create a detailed, long-term roadmap for AI adoption. Set measurable goals, identify key use cases, and allocate resources wisely. Engage senior leadership in the planning process to ensure alignment with business objectives.

The Road Ahead for Generative AI

Generative AI is a powerful tool, but it’s not without its challenges. Issues like privacy concerns, bias in AI, and high costs are just some of the problems we need to solve.

Instead of avoiding these problems, we should face them head-on. How can we use AI safely and fairly? Are we ready to learn and adapt as this technology grows?

The future of AI depends on how well we handle these challenges. Now is the time to act: make smart decisions and set clear rules for how AI is used. It’s up to all of us (developers, businesses, and users) to guide AI in the right direction and use this powerful tool responsibly and wisely.

FAQs

What is one challenge in ensuring fairness in generative AI?

One challenge in ensuring fairness in generative AI is addressing bias in the AI models. Since AI systems learn from large datasets, any bias in the data can be reflected in the AI’s outputs, leading to unfair or discriminatory results. Ensuring fairness requires using diverse datasets and regular checks to reduce bias.

What challenges does generative AI face with respect to data?

Generative AI faces several challenges with respect to data, including data privacy and security risks. AI models need large amounts of data for training, and there is a concern that sensitive information could be exposed or misused during the process. Protecting this data is critical to maintaining privacy and trust.

How can generative AI reinforce existing biases?

Generative AI can perpetuate biases that already exist in its training data, which can lead to outputs that reinforce stereotypes or inequality. To ensure fairness, it’s important to use carefully curated, representative data and involve diverse perspectives in the AI development process.

What are generative AI challenges?

Generative AI faces several challenges, including issues with data privacy, high computational costs, lack of skilled workers, and ethical concerns. Additionally, ensuring fairness and reducing bias in AI outputs are significant challenges that need to be addressed to use this technology responsibly.